This document briefly introduces the built-in functions and detectors designed for each app.

Grip Detection

Suction Detection

Inner Grip Detection

1. Output format

| Field | Description |
| --- | --- |
| inner_grip | num_grip x 5 array, where each grip is g = [xc, yc, zc, w, score] |
| best_inner_ind | index of the best inner grip |
| best_n_inner_inds | indexes of the top n inner grips |
| best_inner | target inner grip selected from all inner grips |
| im | input image with the grips drawn on it |
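As a minimal sketch of how these fields fit together, the snippet below builds a mock result dictionary and reads the best and top-n inner grips from it. The values are made up for illustration, `describe_inner_grip` is a hypothetical helper, and the sketch assumes each row holds the five listed values [xc, yc, zc, w, score]; it does not call Kpick.

```python
import numpy as np

# Mock result with the documented field names (values are illustrative only).
# Each row of inner_grip is assumed to be [xc, yc, zc, w, score].
ret = {
    "inner_grip": np.array([
        [120.0, 80.0, 650.0, 30.0, 0.62],
        [200.0, 95.0, 640.0, 28.0, 0.91],
        [310.0, 60.0, 655.0, 35.0, 0.47],
    ]),
    "best_inner_ind": 1,
    "best_n_inner_inds": [1, 0],
}


def describe_inner_grip(row):
    """Hypothetical helper: turn one inner-grip row into a labeled dict."""
    xc, yc, zc, w, score = row
    return {
        "center": (float(xc), float(yc), float(zc)),
        "width": float(w),
        "score": float(score),
    }


# Look up the best grip and the top-n grips by their indexes.
best = describe_inner_grip(ret["inner_grip"][ret["best_inner_ind"]])
top_n = [describe_inner_grip(ret["inner_grip"][i]) for i in ret["best_n_inner_inds"]]
print(best)
```

With a real detector, the same indexing would apply to the dictionary returned by the detection call, provided the array layout matches the table above.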

2. Create and Load Detector

Using Kpick’s default detector:

    from kpick.pick.inner_grip_detector import get_inner_detector_obj, InnerGripDetector

    detector = get_inner_detector_obj()(cfg_path=cfg_path)

Note

Please refer to configs/inner_outer_grip.cfg.

3. Modify and Load Detector

Extending Kpick’s default detector:

    from kpick.pick.inner_grip_detector import get_inner_detector_obj, InnerGripDetector

    class AppDetector(InnerGripDetector):
        def new_function(self):
            print('new function')

    detector = get_inner_detector_obj(Detector=AppDetector)(cfg_path=cfg_path)

4. Demo on single RGB-D image

    import cv2
    from ketisdk.vision.utils.rgbd_utils_v2 import RGBD
    from kpick.pick.inner_grip_detector import get_inner_detector_obj

    # load model
    detector = get_inner_detector_obj()(cfg_path='configs/inner_grip.cfg')

    # load image (OpenCV reads BGR; reverse the channel axis to get RGB)
    rgb = cv2.imread('data/test_images/inner_grip_rgb.png')[:, :, ::-1]
    depth = cv2.imread('data/test_images/inner_grip_depth.png', cv2.IMREAD_UNCHANGED)
    rgbd = RGBD(rgb=rgb, depth=depth, depth_min=600, depth_max=800,
                denoise_ksize=detector.args.sensor.denoise_ksize)
    rgbd.set_workspace(pts=[(424, 176), (976, 180), (971, 559), (426, 553)])

    # predict
    detector.args.flag.show_steps = True
    ret = detector.detect_and_show_inner_grips(rgbd=rgbd, net_args=detector.args.inner_grip_net,
                                               args=detector.args,
                                               remove_bg=detector.args.inner_grip_net.remove_bg)

    # show (convert RGB back to BGR for cv2.imshow)
    cv2.imshow('reviewer', ret['im'][:, :, ::-1])
    cv2.waitKey()
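The demo passes `depth_min` and `depth_max` when building the RGBD object. Before running the detector it can help to check how much of a depth image falls inside the chosen range. The sketch below does this with plain NumPy on a tiny synthetic array; `depth_range_mask` is a hypothetical helper, and the sketch is not a description of how `RGBD` handles these arguments internally.

```python
import numpy as np


def depth_range_mask(depth, depth_min, depth_max):
    """Return a boolean mask that is True where depth lies in [depth_min, depth_max]."""
    return (depth >= depth_min) & (depth <= depth_max)


# Tiny synthetic depth image, in the same units as the depth file (illustrative values).
depth = np.array([[0, 550, 620],
                  [700, 790, 900]], dtype=np.uint16)

mask = depth_range_mask(depth, depth_min=600, depth_max=800)
valid_ratio = float(mask.mean())  # fraction of pixels inside the range
print(valid_ratio)
```

A very low ratio suggests that the range does not match the camera-to-object distance in the scene.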

5. Parameters tuning

| Parameter | Description |
| --- | --- |
| grip_net.erode_h | width of the gripper plate |
| grip_net.grip_w_ranges | ranges of gripper width, tuned for each target object |
| grip_net.grad_thresh | gradient threshold; a smaller value yields more grip candidates but more noise, and vice versa |
| grip_net.remove_bg | whether to remove grips detected on the background |
| grip_net.top_n | number of top target grips (n) |
| grip_net.dy | a smaller value yields denser grip candidates |
| grip_net.ellipse_axes | dimensions of the ellipse used to examine neighboring grips; use larger dimensions for bigger objects |
| inner_grip_net.score_thresh | score threshold of the inner grip detector |
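To make the interplay between a score threshold and `top_n` concrete, here is a small sketch of threshold-then-rank selection over an array whose last column is the score. `select_top_n` is a hypothetical helper written for illustration, and the inclusive `>=` comparison is an assumption; the source does not describe how Kpick applies these parameters internally.

```python
import numpy as np


def select_top_n(grips, score_thresh, top_n):
    """Return indexes of up to top_n rows with score >= score_thresh, best first.

    The score is assumed to be the last column of each row.
    """
    scores = grips[:, -1]
    keep = np.flatnonzero(scores >= score_thresh)           # rows passing the threshold
    order = keep[np.argsort(-scores[keep], kind="stable")]  # sort survivors by descending score
    return order[:top_n]


# Illustrative rows in the [xc, yc, zc, w, score] layout.
grips = np.array([
    [120.0, 80.0, 650.0, 30.0, 0.62],
    [200.0, 95.0, 640.0, 28.0, 0.91],
    [310.0, 60.0, 655.0, 35.0, 0.47],
    [150.0, 70.0, 645.0, 32.0, 0.75],
])

selected = select_top_n(grips, score_thresh=0.5, top_n=2)
print(selected)
```

Raising the threshold shrinks the candidate pool before ranking, so fewer than `top_n` grips may be returned.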

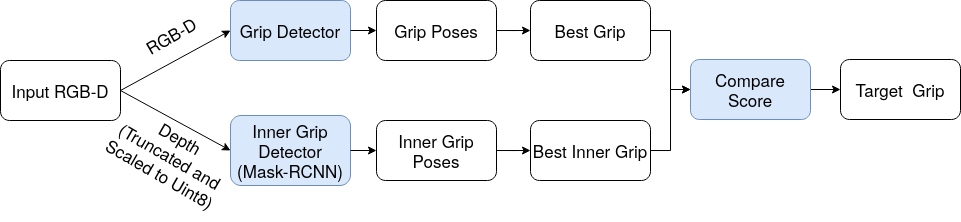

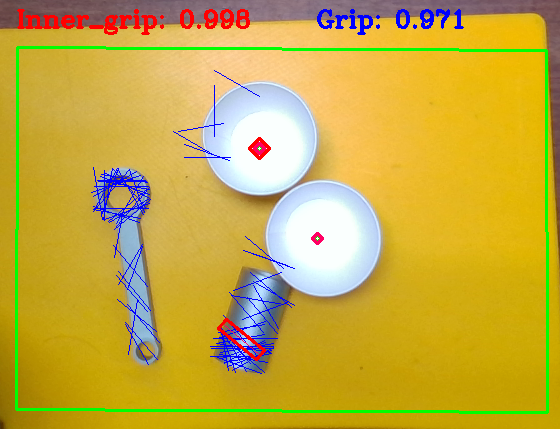

Inner and Outer Grip Detection

1. Output format

| Field | Description |
| --- | --- |
| grip | num_grip x 10 ndarray, where each grip is g = [xc, yc, zc, W, H, x0, y0, x1, y1, score]; (x0, y0) and (x1, y1) are the two endpoints of the grip |
| inner_grip | num_grip x 5 array, where each grip is g = [xc, yc, zc, w, score] |
| best_ind | index of the best grip |
| best_inner_ind | index of the best inner grip |
| best_n_inds | indexes of the top n grips |
| best_n_inner_inds | indexes of the top n inner grips |
| target | target grip selected from all grips and inner grips |
| target_name | name of the target grip type [grip/inner_grip] |
| im | input image with the grips drawn on it |
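Because each `grip` row carries both endpoints, the in-plane orientation and opening length of a grip can be derived from it. The sketch below unpacks one row in the documented order and computes these quantities; `grip_geometry` is a hypothetical helper, the row values are made up, and the angle is measured in image coordinates (y pointing down), which is an assumption about how the coordinates should be read.

```python
import math

import numpy as np


def grip_geometry(g):
    """Hypothetical helper: derive angle, length and midpoint from a 10-value grip row."""
    xc, yc, zc, W, H, x0, y0, x1, y1, score = (float(v) for v in g)
    angle_deg = math.degrees(math.atan2(y1 - y0, x1 - x0))  # direction from endpoint 0 to endpoint 1
    length = math.hypot(x1 - x0, y1 - y0)                    # distance between the endpoints in pixels
    midpoint = ((x0 + x1) / 2.0, (y0 + y1) / 2.0)
    return angle_deg, length, midpoint


# Illustrative row: [xc, yc, zc, W, H, x0, y0, x1, y1, score]
g = np.array([100.0, 100.0, 700.0, 60.0, 20.0, 70.0, 100.0, 130.0, 100.0, 0.8])

angle_deg, length, midpoint = grip_geometry(g)
print(angle_deg, length, midpoint)
```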

2. Create and Load Detector

Using Kpick’s default detector:

    from kpick.pick.grip_inner_outer import get_inner_outer_grip_detector_obj

    detector = get_inner_outer_grip_detector_obj()(cfg_path=cfg_path)

Note

Please refer to configs/inner_outer_grip.cfg.

3. Modify and Load Detector

Extending Kpick’s default detector:

    from kpick.pick.grip_inner_outer import get_inner_outer_grip_detector_obj, InnerOuterGripDetector

    class AppDetector(InnerOuterGripDetector):
        def new_function(self):
            print('new function')

    detector = get_inner_outer_grip_detector_obj(Detector=AppDetector)(cfg_path=cfg_path)

4. Demo on single RGB-D image

    import cv2
    from ketisdk.vision.utils.rgbd_utils_v2 import RGBD
    from kpick.pick.grip_inner_outer import get_inner_outer_grip_detector_obj

    # load model
    detector = get_inner_outer_grip_detector_obj()(cfg_path='configs/inner_outer_grip.cfg')

    # load image (OpenCV reads BGR; reverse the channel axis to get RGB)
    rgb = cv2.imread('data/test_images/inner_outer_grip_rgb.png')[:, :, ::-1]
    depth = cv2.imread('data/test_images/inner_outer_grip_depth.png', cv2.IMREAD_UNCHANGED)
    rgbd = RGBD(rgb=rgb, depth=depth, depth_min=600, depth_max=800,
                denoise_ksize=detector.args.sensor.denoise_ksize)
    rgbd.set_workspace(pts=[(360, 270), (889, 273), (890, 635), (359, 632)])

    # predict
    detector.args.flag.show_steps = True
    ret = detector.detect_and_show(rgbd=rgbd)

    # show (convert RGB back to BGR for cv2.imshow)
    cv2.imshow('reviewer', ret['im'][:, :, ::-1])
    cv2.waitKey()

5. Parameters tuning

| Parameter | Description |
| --- | --- |
| grip_net.erode_h | width of the gripper plate |
| grip_net.grip_w_ranges | ranges of gripper width, tuned for each target object |
| grip_net.grad_thresh | gradient threshold; a smaller value yields more grip candidates but more noise, and vice versa |
| grip_net.remove_bg | whether to remove grips detected on the background |
| grip_net.top_n | number of top target grips (n) |
| grip_net.dy | a smaller value yields denser grip candidates |
| grip_net.ellipse_axes | dimensions of the ellipse used to examine neighboring grips; use larger dimensions for bigger objects |
| inner_grip_net.score_thresh | score threshold of the inner grip detector |
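The trade-off described for `grad_thresh` can be illustrated on a synthetic depth map. The sketch below counts pixels whose depth-gradient magnitude exceeds a threshold. It assumes the threshold applies to a depth-gradient magnitude, which the source does not state, and `count_edge_pixels` is a hypothetical helper rather than part of Kpick.

```python
import numpy as np


def count_edge_pixels(depth, grad_thresh):
    """Count pixels whose depth-gradient magnitude is above grad_thresh."""
    gy, gx = np.gradient(depth.astype(np.float64))  # finite-difference gradients along rows and columns
    magnitude = np.hypot(gx, gy)
    return int((magnitude > grad_thresh).sum())


# Synthetic scene: flat background, one box with a clear step edge, one small noise bump.
depth = np.full((8, 8), 800.0)
depth[2:6, 2:6] = 780.0  # object 20 units closer than the background
depth[0, 7] = 798.0      # weak, noise-like deviation

low_thresh_count = count_edge_pixels(depth, grad_thresh=0.5)
high_thresh_count = count_edge_pixels(depth, grad_thresh=5.0)
print(low_thresh_count, high_thresh_count)
```

With the low threshold, the pixels around the noise bump are counted together with the object boundary; with the high threshold, only the object boundary remains.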

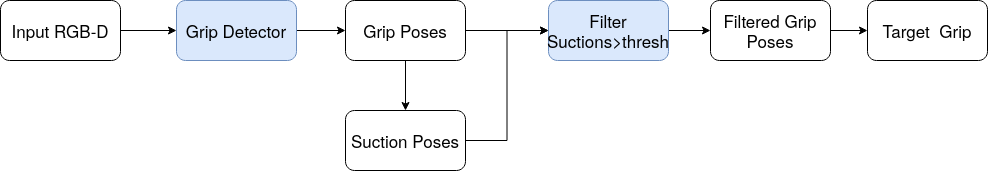

Hybrid Grasp Detection

1. Output format

| Field | Description |
| --- | --- |
| grip | num_grip x 10 ndarray, where each grip is g = [xc, yc, zc, W, H, x0, y0, x1, y1, score]; (x0, y0) and (x1, y1) are the two endpoints of the grip |
| best_ind | index of the best grip |
| best_n_inds | indexes of the top n grips |
| im | input image with the grips drawn on it |
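A short sketch of reading the top-n grips from such a result with NumPy index arrays follows. The dictionary is a mock with made-up values, and the ordering of `best_n_inds` (best first) is an assumption made for the mock, not something the table states.

```python
import numpy as np

# Mock result; each grip row is [xc, yc, zc, W, H, x0, y0, x1, y1, score] (illustrative values).
ret = {
    "grip": np.array([
        [100.0, 100.0, 500.0, 60.0, 20.0, 70.0, 100.0, 130.0, 100.0, 0.80],
        [220.0, 140.0, 510.0, 55.0, 20.0, 220.0, 112.5, 220.0, 167.5, 0.65],
        [300.0, 90.0, 495.0, 50.0, 20.0, 275.0, 90.0, 325.0, 90.0, 0.93],
    ]),
    "best_ind": 2,
    "best_n_inds": np.array([2, 0]),
}

top = ret["grip"][ret["best_n_inds"]]  # index-array lookup returns an (n, 10) array
centers = top[:, :3]                   # xc, yc, zc of each selected grip
scores = top[:, -1]                    # score is the last column
print(centers, scores)
```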

2. Create and Load Detector

Using Kpick’s default detector:

    from kpick.pick.hybrid_grasp import get_hybrid_grasp_detector_obj

    detector = get_hybrid_grasp_detector_obj()(cfg_path=cfg_path)

Note

Please refer to configs/hybrid_grasp.cfg.

3. Modify and Load Detector

Extending Kpick’s default detector:

    from kpick.pick.hybrid_grasp import get_hybrid_grasp_detector_obj, HybridGraspDetector

    class AppDetector(HybridGraspDetector):
        def new_function(self):
            print('new function')

    detector = get_hybrid_grasp_detector_obj(Detector=AppDetector)(cfg_path=cfg_path)

4. Demo on single RGB-D image

    import cv2
    from ketisdk.vision.utils.rgbd_utils_v2 import RGBD
    from kpick.pick.hybrid_grasp import get_hybrid_grasp_detector_obj

    # load model
    detector = get_hybrid_grasp_detector_obj()(cfg_path='configs/hybrid_grasp.cfg')

    # load image (OpenCV reads BGR; reverse the channel axis to get RGB)
    rgb = cv2.imread('data/test_images/hybrid_grasp_rgb.png')[:, :, ::-1]
    depth = cv2.imread('data/test_images/hybrid_grasp_depth.png', cv2.IMREAD_UNCHANGED)
    rgbd = RGBD(rgb=rgb, depth=depth, depth_min=400, depth_max=600,
                denoise_ksize=detector.args.sensor.denoise_ksize)
    rgbd.set_workspace(pts=[(360, 270), (889, 273), (890, 635), (359, 632)])

    # predict
    detector.args.flag.show_steps = True
    ret = detector.detect_and_show_hybrid(rgbd=rgbd)

    # show (convert RGB back to BGR for cv2.imshow)
    cv2.imshow('reviewer', ret['im'][:, :, ::-1])
    cv2.waitKey()

5. Parameters tuning

| Parameter | Description |
| --- | --- |
| grip_net.erode_h | width of the gripper plate |
| grip_net.grip_w_ranges | ranges of gripper width, tuned for each target object |
| grip_net.grad_thresh | gradient threshold; a smaller value yields more grip candidates but more noise, and vice versa |
| grip_net.remove_bg | whether to remove grips detected on the background |
| grip_net.top_n | number of top target grips (n) |
| grip_net.dy | a smaller value yields denser grip candidates |
| grip_net.ellipse_axes | dimensions of the ellipse used to examine neighboring grips; use larger dimensions for bigger objects |
| suction_net.pad_sizes | patch sizes used in the suction pose scanning process; use larger values for larger objects |
| suction_net.stride | stride of the suction scanning process; a smaller value yields more suction candidates but is computationally heavier |
| rpn.enable | whether to enable the RPN for the suction detector |
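To give a feel for how the stride affects the amount of work, the sketch below counts the window positions of a plain sliding-window scan over a rectangular region. It assumes the suction scan behaves like such a sliding window, which the source does not confirm; `count_scan_positions` and the region and patch dimensions are hypothetical.

```python
def count_scan_positions(width, height, patch_size, stride):
    """Number of square patches of side patch_size that fit when stepping by stride."""
    if patch_size > width or patch_size > height:
        return 0
    nx = (width - patch_size) // stride + 1  # positions along x
    ny = (height - patch_size) // stride + 1  # positions along y
    return nx * ny


# Illustrative 530 x 360 pixel region scanned with 40-pixel patches.
dense = count_scan_positions(530, 360, patch_size=40, stride=10)
coarse = count_scan_positions(530, 360, patch_size=40, stride=20)
print(dense, coarse)
```

Under this assumption, halving the stride roughly quadruples the number of candidate positions, which matches the note that a smaller stride is computationally heavier.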

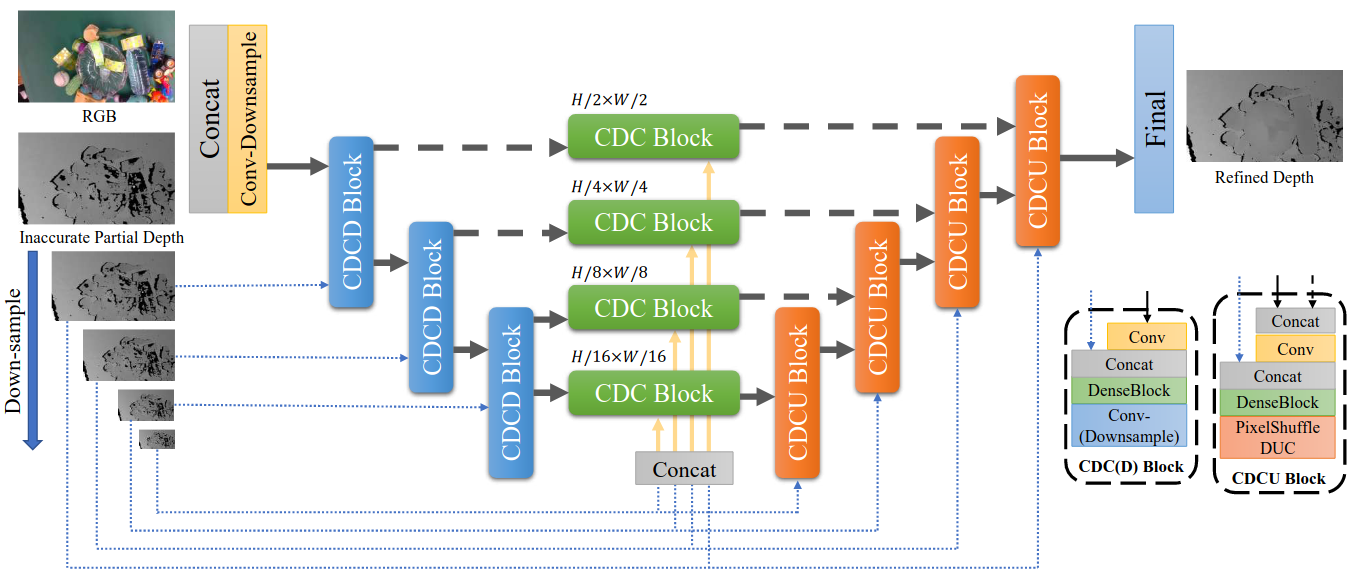

Depth completion of transparent objects

1. Testing on existing solution

Model: TransCG: A Large-Scale Real-World Dataset for Transparent Object Depth Completion and A Grasping Baseline

Input: RGB and depth images

Output: depth